Watch Demo YouTube Video

Watch Demo YouTube Video

Optimized Airtable Bulk Data Workflow

This workflow is specifically designed to address the challenges of upserting or inserting large volumes of data into Airtable. By leveraging the Airtable Batch API, it delivers up to 9X faster performance compared to standard data insertion methods, making it an indispensable tool for high-demand data operations.

Key Features

• Accelerated Data Processing:

Utilize the Airtable Batch API to perform bulk operations swiftly and efficiently.

• Seamless Workflow Integration:

Easily integrate this sub-processor into any n8n workflow that requires Airtable updates, ensuring smooth data synchronization across multiple processes.

• Enhanced Reliability and Scalability:

Designed to handle extensive datasets, this solution is perfect for real-time updates, database migrations, and continuous data syncing without performance degradation.

Setup Instructions

- Add the Sub-Workflow:

Import this workflow to your n8n workflows, then add it as a sub-workflow call in other workflows requiring a lot of Airtable updates. - Configure Sub-Worflow variables:

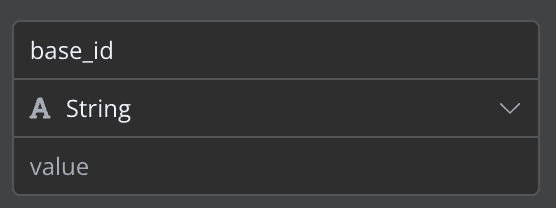

"set_Batching_vars" SET Node

• Obtain the correct Base ID and Table ID, and insert in the "set_Batching_vars" SET Node.

• Add or select the correct Airtable credentials in both Airtable Upsert & Insert HTTP nodes in the sub-workflow.

• Ensure the API permissions are set correctly to allow data insertion/upsertion. - Adjust Batch Settings:

"set_Batching_vars" SET Node

• In the same "set_Batching_vars" SET Node, put the field name in the "merge_on" field if you wish to upsert record, otherwise, keep it empty for insertion.

• Correctly setup the fields you want to insert/upsert in the 'record' field.

- Test the Integration:

Run a small-scale test to ensure that data is correctly processed and inserted/upserted into Airtable.

Use Case Scenarios

• Bulk Data Insertion:

Efficiently insert large datasets into Airtable, perfect for initial data migrations or periodic data updates.

• Real-Time Data Upsertion:

Keep your Airtable records current by integrating this workflow with your live data pipelines.

• Database Migrations & Synchronization:

Seamlessly transfer data between databases and Airtable, ensuring minimal downtime and data integrity.

Specific Requirements for Airtable Integration

• Airtable Account:

You must have an active Airtable account with appropriate permissions to modify the target base.

• API Credentials:

Secure a valid Airtable API connection and ensure you have the correct Base ID and Table ID for the target data store.

By integrating this workflow into your system, you can significantly improve the efficiency of your Airtable operations, reducing processing time and enabling smoother data management at scale.